
Hadoop JobHistory


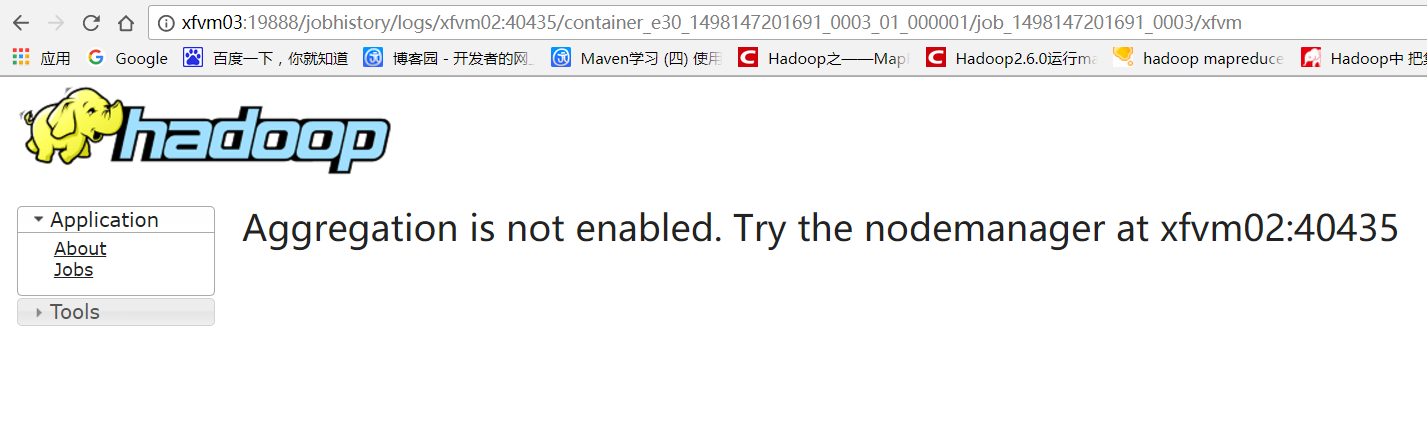

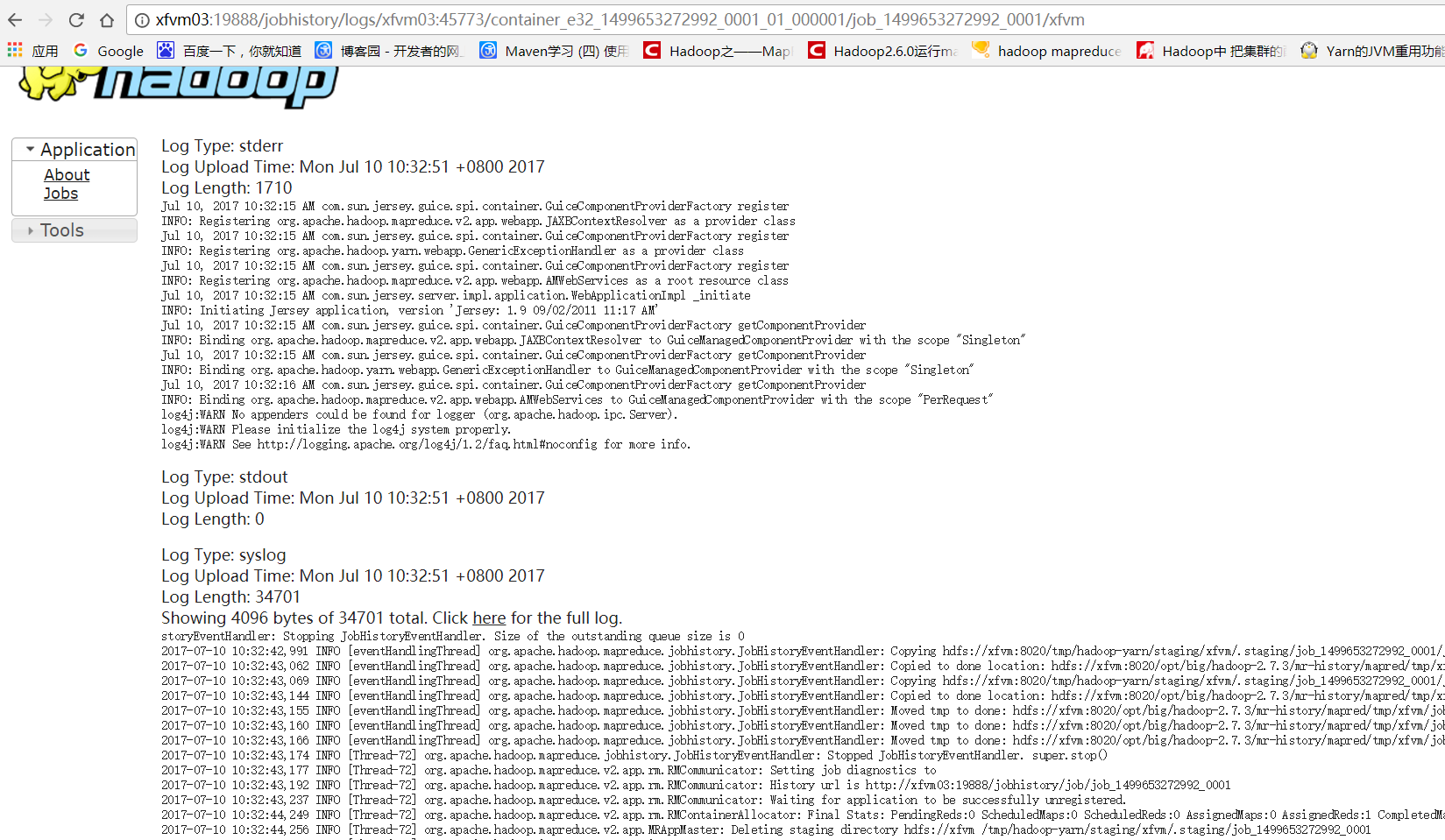

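JobHistory records the run logs of finished MapReduce jobs. The log data is stored in an HDFS directory. The JobHistory server does not run by default: it has to be configured in mapred-site.xml and started manually.

1. mapred-site.xml settings:

| Property | Value | Description |
| --- | --- | --- |
| mapreduce.framework.name | yarn | Runs MapReduce on YARN, which is the key difference from Hadoop 1 |
| mapreduce.jobhistory.address | xfvm03:10020 | IPC address (host:port) of the MR JobHistory Server |
| mapreduce.jobhistory.webapp.address | xfvm03:19888 | Web address for viewing the records of completed MapReduce jobs; the history server must be running |
| mapreduce.jobhistory.done-dir | /opt/big/hadoop-2.7.3/mr-history/done | Where the MR JobHistory Server keeps the logs it manages. Default in Apache Hadoop 2.7: ${yarn.app.mapreduce.am.staging-dir}/history/done |
| mapreduce.jobhistory.intermediate-done-dir | /opt/big/hadoop-2.7.3/mr-history/mapred/tmp | Where MapReduce jobs write their history logs. Default in Apache Hadoop 2.7: ${yarn.app.mapreduce.am.staging-dir}/history/done_intermediate |
| mapreduce.map.memory.mb | 1024 | Memory limit for each map task, in MB |
| mapreduce.reduce.memory.mb | 2048 | Memory limit for each reduce task, in MB |

2. Start the JobHistory server:

    [xfvm@xfvm03 ~]$ mr-jobhistory-daemon.sh start historyserver

Problem record: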

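3. Fix:

Add the following property to yarn-site.xml and restart the JobHistory server. Log aggregation itself is carried out by the NodeManagers, so they need to be restarted as well to pick up the change.

| Property | Value |
| --- | --- |
| yarn.log-aggregation-enable | true |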
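The settings from steps 1 and 3 are listed above without their XML wrapping, so here is a sketch of how they could look in the actual files. Hostnames, ports, and paths are the ones used in this article; the `<description>` texts are paraphrased, and the layout is only one reasonable way to write the file, not necessarily the author's original.

```xml
<?xml version="1.0"?>
<!-- mapred-site.xml (sketch; values taken from this article) -->
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
    <description>Run MapReduce on YARN</description>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>xfvm03:10020</value>
    <description>JobHistory Server IPC host:port</description>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>xfvm03:19888</value>
    <description>JobHistory Server web UI host:port</description>
  </property>
  <property>
    <name>mapreduce.jobhistory.done-dir</name>
    <value>/opt/big/hadoop-2.7.3/mr-history/done</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.intermediate-done-dir</name>
    <value>/opt/big/hadoop-2.7.3/mr-history/mapred/tmp</value>
  </property>
  <property>
    <name>mapreduce.map.memory.mb</name>
    <value>1024</value>
  </property>
  <property>
    <name>mapreduce.reduce.memory.mb</name>
    <value>2048</value>
  </property>
</configuration>
```

The step 3 fix is a single extra property inside the `<configuration>` element of yarn-site.xml:

```xml
<!-- yarn-site.xml fragment (sketch): turn on log aggregation -->
<property>
  <name>yarn.log-aggregation-enable</name>
  <value>true</value>
</property>
```

Once the daemons have been restarted, the web address configured above (xfvm03:19888) is the place to check that finished jobs and their logs show up.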

4. The complete yarn-site.xml configuration:

| Property | Value | Note |
| --- | --- | --- |
| yarn.resourcemanager.ha.enabled | true | |
| yarn.resourcemanager.cluster-id | xfvm-yarn | |
| yarn.resourcemanager.ha.rm-ids | rm1,rm2 | |
| yarn.nodemanager.aux-services | mapreduce_shuffle | Auxiliary service used by the MapReduce shuffle |
| yarn.resourcemanager.hostname.rm1 | xfvm01 | |
| yarn.resourcemanager.hostname.rm2 | xfvm02 | |
| yarn.resourcemanager.recovery.enabled | true | |
| yarn.resourcemanager.store.class | org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore | |
| yarn.resourcemanager.zk-address | xfvm02:2181,xfvm03:2181,xfvm04:2181 | |
| yarn.nodemanager.aux-services.mapreduce_shuffle.class | org.apache.hadoop.mapred.ShuffleHandler | The key has to contain the aux-service name, mapreduce_shuffle |
| yarn.nodemanager.resource.memory-mb | 2048 | If this is lower than the scheduler's minimum allocation (1024 MB by default), the NodeManager cannot start and reports: NodeManager from slavenode2 doesn't satisfy minimum allocations, Sending SHUTDOWN signal to the NodeManager. |
| yarn.scheduler.minimum-allocation-mb | 512 | Minimum memory a single task can request; default 1024 MB |
| yarn.scheduler.maximum-allocation-mb | 2048 | Maximum memory a single task can request; default 8192 MB |
| yarn.log-aggregation-enable | true | |
| yarn.log-aggregation.retain-seconds | 259200 | 3 days |
| yarn.resourcemanager.connect.retry-interval.ms | 2000 | |
| yarn.resourcemanager.ha.automatic-failover.enabled | true | |
| yarn.resourcemanager.ha.id | rm1 | If we want to launch more than one RM in single node, we need this configuration |
| yarn.resourcemanager.zk-state-store.address | xfvm02:2181,xfvm03:2181,xfvm04:2181 | |
| yarn.app.mapreduce.am.scheduler.connection.wait.interval-ms | 5000 | |
| yarn.resourcemanager.address.rm1 | xfvm01:8132 | |
| yarn.resourcemanager.scheduler.address.rm1 | xfvm01:8130 | |
| yarn.resourcemanager.webapp.address.rm1 | xfvm01:8188 | |
| yarn.resourcemanager.resource-tracker.address.rm1 | xfvm01:8131 | |
| yarn.resourcemanager.admin.address.rm1 | xfvm01:8033 | |
| yarn.resourcemanager.ha.admin.address.rm1 | xfvm01:23142 | |
| yarn.resourcemanager.address.rm2 | xfvm02:8132 | |
| yarn.resourcemanager.scheduler.address.rm2 | xfvm02:8130 | |
| yarn.resourcemanager.webapp.address.rm2 | xfvm02:8188 | |
| yarn.resourcemanager.resource-tracker.address.rm2 | xfvm02:8131 | |
| yarn.resourcemanager.admin.address.rm2 | xfvm02:8033 | |
| yarn.resourcemanager.ha.admin.address.rm2 | xfvm02:23142 | |
| yarn.nodemanager.local-dirs | /opt/big/hadoop-2.7.3/yarn/local | |
| yarn.nodemanager.log-dirs | /opt/big/hadoop-2.7.3/yarn/logs | |
| mapreduce.shuffle.port | 23080 | |
| yarn.client.failover-proxy-provider | org.apache.hadoop.yarn.client.ConfiguredRMFailoverProxyProvider | |
| yarn.resourcemanager.ha.automatic-failover.zk-base-path | /yarn-leader-election | Optional setting. The default value is /yarn-leader-election |
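To show how the step 4 settings map onto the file itself, here is a sketch of the ResourceManager HA and recovery part of yarn-site.xml. It is an excerpt only, using the cluster id, RM ids, hostnames, and ZooKeeper quorum from this article; the grouping and comments are illustrative and not taken from the original file.

```xml
<?xml version="1.0"?>
<!-- yarn-site.xml excerpt (sketch): ResourceManager HA and state recovery -->
<configuration>
  <!-- Enable HA and name the two ResourceManagers -->
  <property>
    <name>yarn.resourcemanager.ha.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.resourcemanager.cluster-id</name>
    <value>xfvm-yarn</value>
  </property>
  <property>
    <name>yarn.resourcemanager.ha.rm-ids</name>
    <value>rm1,rm2</value>
  </property>
  <property>
    <name>yarn.resourcemanager.hostname.rm1</name>
    <value>xfvm01</value>
  </property>
  <property>
    <name>yarn.resourcemanager.hostname.rm2</name>
    <value>xfvm02</value>
  </property>
  <property>
    <name>yarn.resourcemanager.ha.automatic-failover.enabled</name>
    <value>true</value>
  </property>

  <!-- Keep ResourceManager state in ZooKeeper so it survives a failover -->
  <property>
    <name>yarn.resourcemanager.recovery.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.resourcemanager.store.class</name>
    <value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>
  </property>
  <property>
    <name>yarn.resourcemanager.zk-address</name>
    <value>xfvm02:2181,xfvm03:2181,xfvm04:2181</value>
  </property>
</configuration>
```

The per-RM address properties (yarn.resourcemanager.address.rm1 and the like), the NodeManager settings, and the log aggregation settings go into the same `<configuration>` element in the same `<property>` form.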

